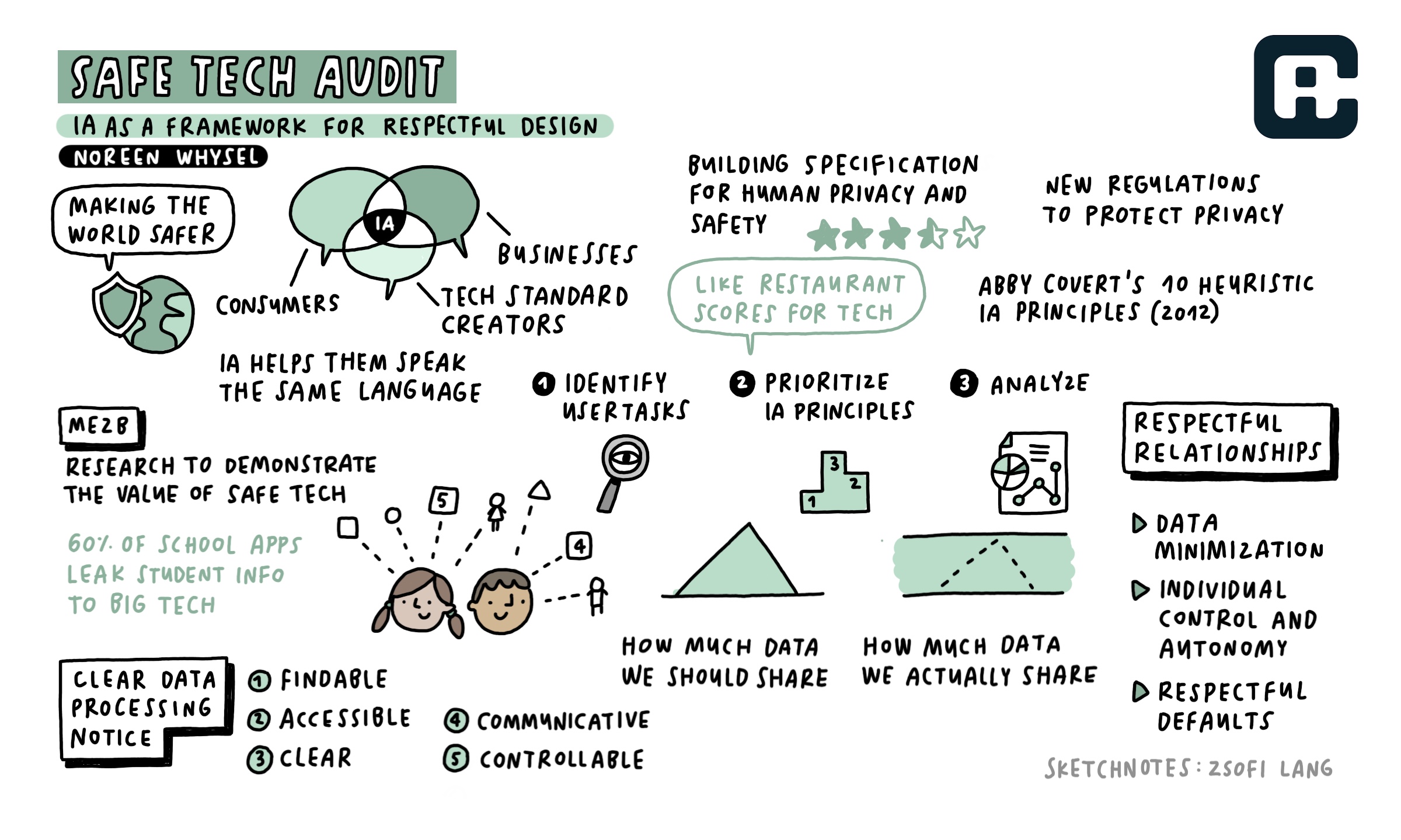

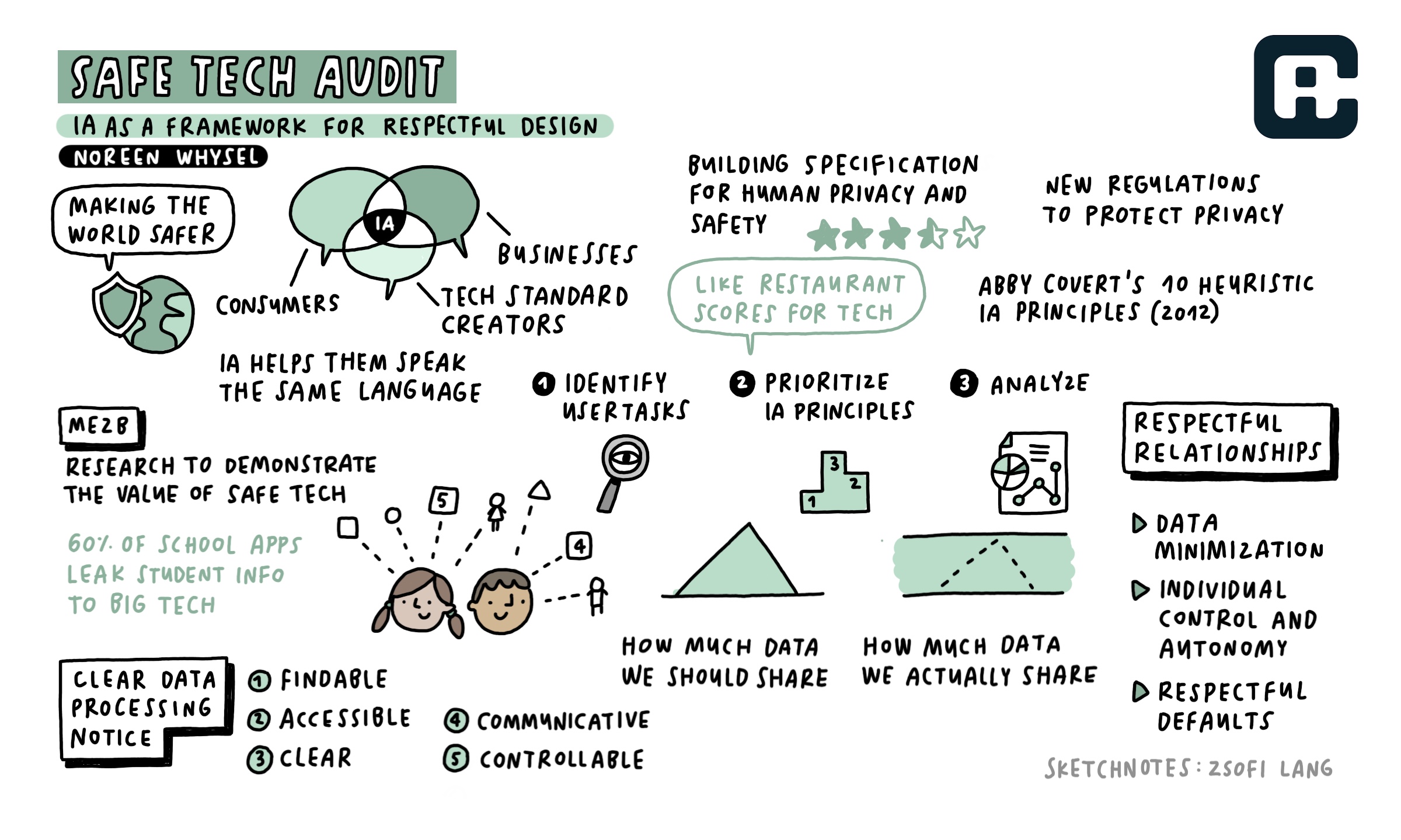

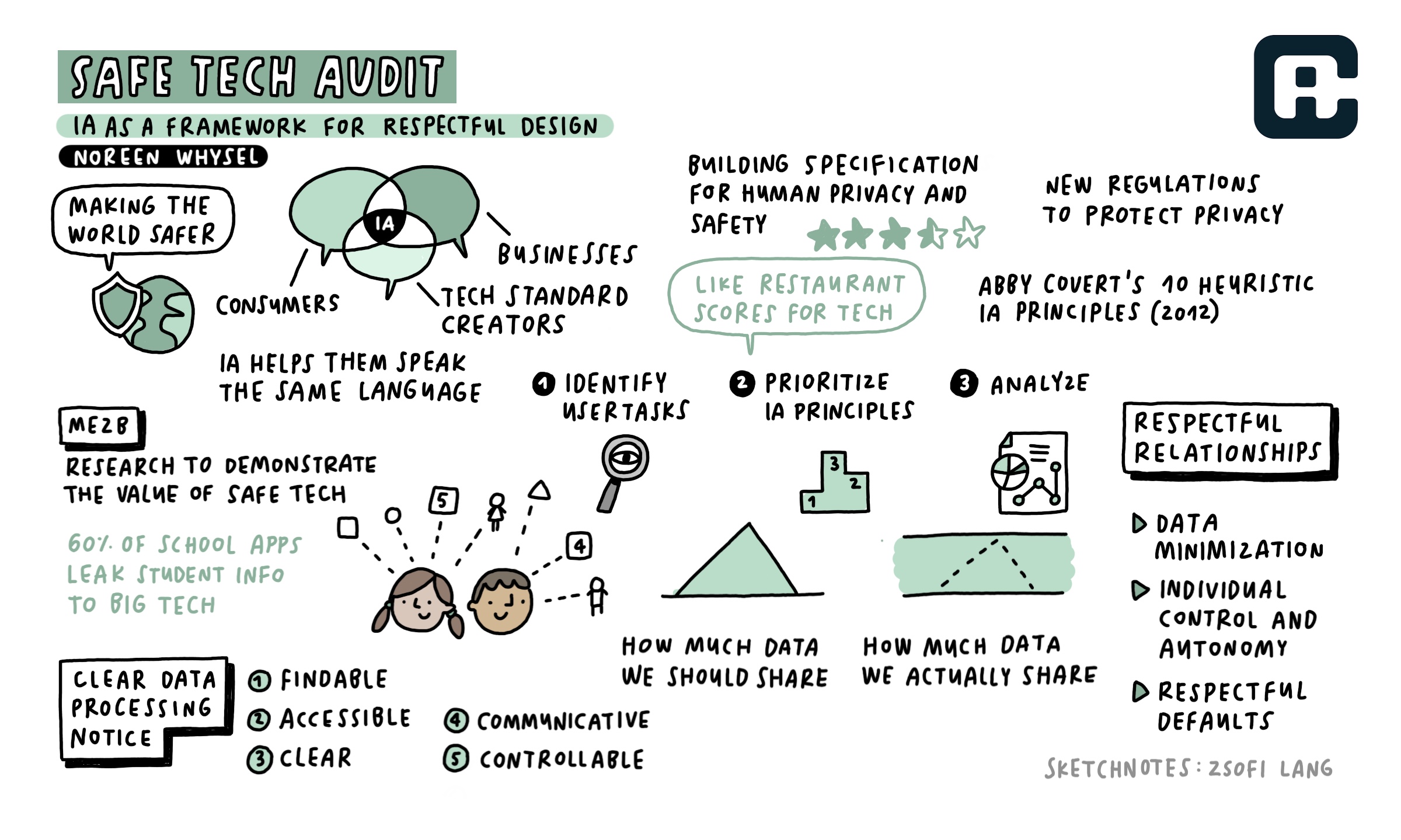

Zsofi Lang’s Sketchnotes from my talk “Safe Tech Audit: IA as a Framework for Respectful Design” from The Information Architecture Conference 2022:

Information Architect, UX Researcher, COO, CPO, Instructor, Facilitator

Watch me and Internet pioneer Vint Cerf discuss the future of the Metaverse at Disruptive Technologists NYC.

Note: this article was originally published as Designing Respectful Tech: What is your relationship with technology? at Boxes and Arrows on February 24, 2022

You’ve been there before. You thought you could trust someone with a secret. You thought it would be safe, but found out later that they blabbed to everyone. Or, maybe they didn’t share it, but the way they used it felt manipulative. You gave more than you got and it didn’t feel fair. But now that it’s out there, do you even have control anymore?

Ok. Now imagine that person was your supermarket.

Or your doctor.

Or your boss.

According to research at the Me2B Alliance, people do feel they have a relationship with technology. It’s emotional. It’s embodied. And it’s very personal.

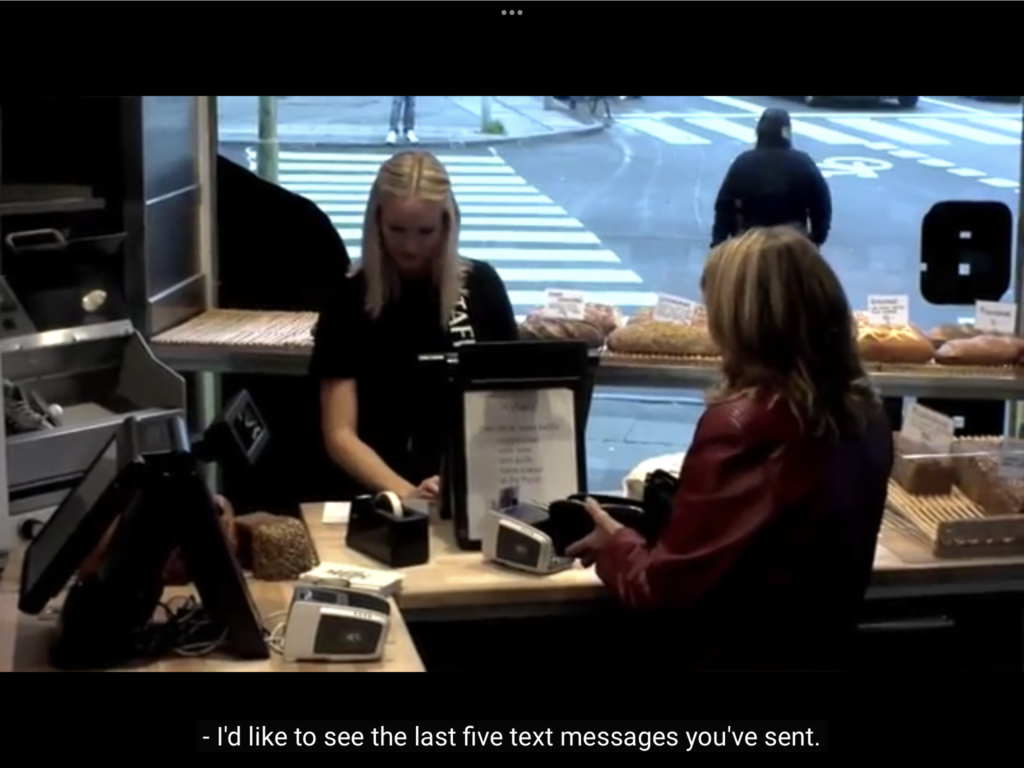

How personal is it? Think about what it would be like if you placed an order at a cafe and they already knew your name, your email, your gender, your physical location, what you read, who you are dating, and that, maybe, you’ve been thinking of breaking up.

We don’t approve of gossipy behavior in our human relationships. So why do we accept it with technology? Sure, we get back some time and convenience, but in many ways it can feel locked in and unequal.

At the Me2B Alliance, we are studying digital relationships to answer questions like “Do people have a relationship with technology?” (They feel that they do). “What does that relationship feel like?” (It’s complicated). And “Do people understand the commitments that they are making when they explore, enter into and dissolve these relationships?” (They really don’t).

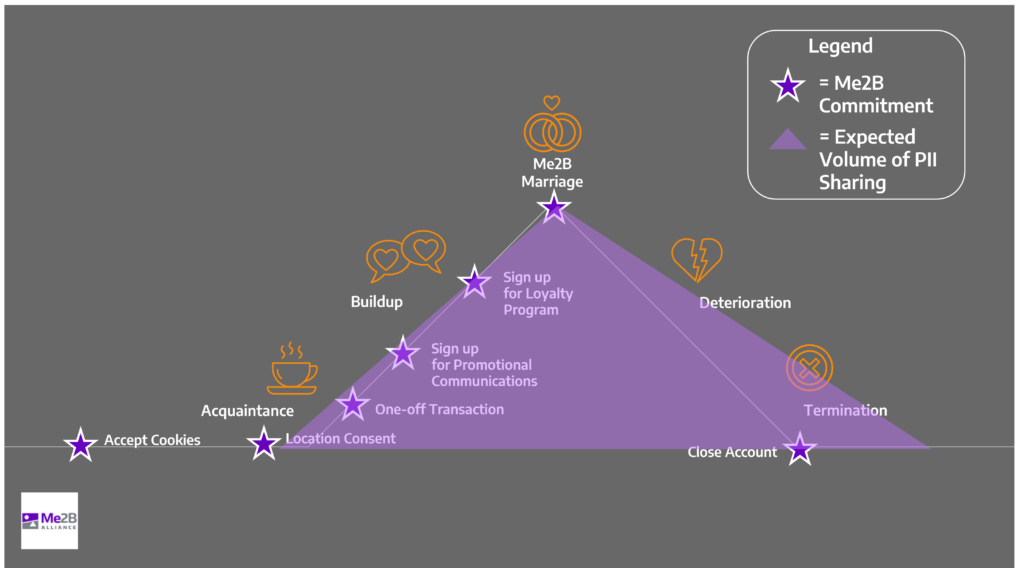

It may seem silly or awkward to think about our dealings with technology as a relationship, but there are parallels with messy human relationships. The Me2BA commitment arc with a digital technology resembles German psychologist George Levinger’s ABCDE relationship model 1, shown by the orange icons in the image below. As with human relationships, we move through states of discovery, commitment and breakup with digital applications, too.

Our assumptions about our technology relationships are similar to the ones we have about our human ones. We assume when we first meet someone there is a clean slate, but this isn’t always true. There may be gossip about you ahead of your meeting. The other person may have looked you up on LinkedIn. With any technology, information about you may be known already, and sharing that data starts well before you sign up for an account.

Today’s news frequently covers stories of personal and societal harm caused by digital media manipulation, dark patterns and personal data mapping. Last year, Facebook whistleblower Frances Haugen exposed how the platform promotes content that they know from their own research causes depression and self-harm in teenage girls. They know this because they know what teenage girls click, post and share.

Technology enables data sharing at every point of the relationship arc, including after you stop using it. Worryingly, even our more trusted digital relationships may not be safe. The Me2B Alliance uncovered privacy violations in K-12 software, and described how abandoned website domains put children and families at risk when their schools forget to renew them.

Most of the technologies that you (and your children) use have relationships with third party data brokers and others with whom they share your data. Each privacy policy, cookie consent and terms of use document on every website or mobile app you use defines a legal relationship, whether you choose to opt in or are locked in by some other process. That means you have a legal relationship with each of these entities from the moment you accessed the app or website, and in most cases, it’s one that you initiated and agreed to.

All the little bits of our digital experiences are floating out there and will stay out there unless we have the agency to set how that data can be used or shared and when it should be deleted. The Me2B Alliance has developed Rules of Engagement for respectful technology relationships and a Digital Harms Dictionary outlining types of violations, such as:

Respectful technology relationships begin with minimizing the amount of data that is collected in the first place. Data minimization reduces the harmful effects of sensitive data getting into the wrong hands.

Next, we should give people agency and control. Individual control over one’s data is a key part of local and international privacy laws like GDPR in Europe, and similar laws in California, Colorado and Virginia, which give consumers the right to consent to data collection, to know what data of theirs is collected and to request to view the data that was collected, correct it, or to have it permanently deleted.

In his short story collection I, Robot, Isaac Asimov introduced the famous “Three Laws of Robotics,” an ethical framework to avoid harmful consequences of machine activity. Today, IAs, programmers and other digital creators make what are essentially robots that help users do work and share information. Much of this activity is out of sight and mind, which is in fact how we, the digital technology users, like it.

But what of the risks? It is important as designers of these machines to consider the consequences of the work we put into the world. I have proposed the following corollary to Asimov’s robotics laws:

Mike Monteiro in his well-known 2014 talk at An Event Apart on How Designers are Destroying the World discusses the second and third law a lot. While we take orders from the stakeholders of our work (the client, the marketers and the shareholders we design for), we have an equal or greater responsibility to understand and mitigate design decisions that have negative effects.

The Me2B Alliance is working on a specification for safe and respectfully designed digital technologies: technologies that Do No Harm. These product integrity tests are conducted by a UX Expert and applied to each commitment stage that a person enters. These stages include first-open, location awareness, cookie consent, promotional and loyalty commitments, and account creation, as well as the termination of the relationship.

Abby Covert’s IA Principles—particularly Findable, Accessible, Clear, Communicative and Controllable—are remarkably appropriate tests for ensuring that the people who use digital technologies have agency and control over the data they entrust to these products:

Findable: Are the legal documents that govern the technology relationship easy to find? What about support services for when I believe my data is incorrect, or being used inappropriately? Can I find a way to delete my account or delete my data?

Accessible: Are these resources easy to access by both human and machine readers and assistive devices? Are they hidden behind some “data paywall” such as a process that requires a change of commitment state, i.e. a data toll, to access?

Clear: Can the average user read and understand the information that explains what data is required for what purpose? Is this information visible or accessible when it is relevant?

Communicative: Does the technology inform the user when the commitment status changes? For example, does it communicate when it needs to access my location or other personal information like age, gender, medical conditions? Does it explain why it needs my data and how to revoke data access when it is no longer necessary?

Controllable: How much control do I have as a user? Can I freely enter into a Me2B Commitment or am I forced to give up some data just to find out what the Me2B Deal is in the first place?

Abby’s other IA principles flow from the above considerations. A Useful product is one that does what it claims to do and communicates the deal you get clearly and accessibly. A Credible product is one that treats the user with respect and communicates its value. With user Control over data sharing and a clear understanding of the service being offered, the true Value of the service is apparent.

Over time the user will come to expect notice of potential changes to commitment states and will have agency over making that choice. These “Helpful Patterns”—clear and discoverable notice of state changes and opt-in commitments—build trust and loyalty, leading to a Delightful, or at least a reassuring, experience for your users.

What I’ve learned from working in the standards world is that Information Architecture Principles provide a solid framework for understanding digital relationships as well as structuring meaning. Because we aren’t just designing information spaces. We’re designing healthy relationships.

1 Levinger, G. (1983). “Development and change.” In H.H. Kelley et al. (Eds.), Close relationships (315–359). New York: W. H. Freeman and Company. https://www.worldcat.org/title/close-relationships/oclc/470636389

2 Asimov, I. (1950). I, Robot. Gnome Press.

In the research I’ve been doing on respectful technology relationships at the Me2B Alliance, it’s a combination of “I’ve got nothing to hide” and “I’ve got no other option”. People are deeply entangled in their technology relationships. Even when presented with overwhelmingly bad scores on Terms of Service and Privacy Policies, they will continue to use products they depend on or that give them access to their family, community, and in the case of Amazon an abundance of choice, entertainment and low prices. Even when they abandon a digital product or service, they are unlikely to delete their accounts. And the adtech SDKs they’ve agreed to track them keep on tracking.

The IA Conference ended its four-week run, which as some of you may recall was originally a five-day event in New Orleans with 12 preconference workshops and 60 talks in three tracks. The format changed to all prerecorded talks released in three tracks daily over a period of three weeks. We put the plenaries on Mondays and Fridays and special programming, like panel talks and poster sessions, on Wednesdays. We used Slack for daily AMAs and Zoom for weekend watch parties and Q&A sessions with plenaries. Other social and mentoring activities took place mornings, weekends and evenings.

The workshops which usually come first were all moved to the fourth week except for Jorge Arango’s IA Essentials. We had a lot of student scholarship attendees and didn’t want to make them wait until after the main conference.

We have a lot of amazing people to thank for pulling it off, starting with dozens of volunteers whose stamina is inspiring. I honestly wasn’t sure we could hold people that long. But Jared Spool thought we could do it and Cheryl at Rosenfeld Media gave us some valuable advice about connecting through online platforms.

So, what did we do? Check out this presentation “Rapid Switch: How we turned a five day onsite event into a monthlong, online celebration,” presented at the 500 Members Celebration of the Digital Collaboration Practitioners.

June 6-8 was OpenStreetMap’s State of the Map Conference at the United Nations. I volunteered at registration and during morning sessions and was able to attend interesting talks on OSM data in Wikipedia, the Red Cross presentation on OSM in disaster response and developing a GIS curriculum in higher education.

One of the highlights was a satellite selfie. Led by a team from DigitalGlobe, a group of about 20 attendees created a large UN-blue circle on the ground and waited for the WorldView 3 and GOI1 satellites to fly over for a routine scan. Orbiting at 15,000 miles per hour about 400 kilometers above Manhattan, the WorldView 3 was expected to take images that would include UN Plaza. The resulting satellite image collected at 11:44am is available on the CartoDB blog (image above), courtesy of CartoDB CEO, Javier de la Torre. Huge thanks to Josh Winer of DigitalGlobe who took time to explain the physics of satellite imagery and kept us entertained while we waited for our not-so-closeup.

Two upcoming events brought to you by GISMO and OWASP Brooklyn.

GISMO

Thursday, February 5, 2015

100 Church Street

2:30 – 4:30 PM

Join us to hear a round of lightning talks given by GIS directors and managers of City agencies. This will be a great opportunity to learn about innovative GIS projects and programs being implemented across municipal government, and to meet with colleagues from other agencies.

GISMO and the Municipal Information Technology Council (MITC) are co-hosting this event. MITC is the organization of City IT professionals. This get together is a unique opportunity to learn about how NYC’s nation leading GIS program is progressing and to have direct contact with the City GIS leadership and staff.

Registration Required

Attendance at this meeting will be by RSVP only and is reserved exclusively for GISMO members*, MITC members and City agency GIS personnel. Please register early as there are a limited number of seats available, and this event will fill up fast!

* GISMO membership means paid dues for the 2014 – 2015 membership year. People on the GISMO mailing list who do not satisfy the above criteria will have to join GISMO and pay their dues in order to attend.

Register at http://www.gismonyc.org/events/next-event/

Technology Transfer – Creating Cultures of Innovation

OWASP Brooklyn

Saturday, February 28, 2015

2:00 PM to 6:00 PM

NYU Poly Pfizer Auditorium

5 MetroTech Center, Brooklyn, NY

To attend this event, you must also register on Eventbrite at this URL to get your ticket: https://www.eventbrite.com/e/technology-transfer-creating-cultures-of-innovation-tickets-15406554419

Description: TBD

Facilitator – Moderator: TBD

Speakers:

Zach Tudor, SRI, Author of Technology Transfer: Crossing the “Valley of Death” – his research on Cybersecurity startups will discuss “Creating Cultures of Innovation” Bio: http://www.csl.sri.com/people/tudor/

Professor Nasir Memon, NYU Polytechnic will discuss NYU Poly’s Technology Transfer. Bio: http://isis.poly.edu/memon/

Brett Scharringhausen, USCENTCOM CCJ8-Science & Technology Chief, Discovery & Integration will discuss CENTCOM Requirements.

Ryan Letts, Veterans Advisor, Brooklyn SBDC CityTech will present and discuss SBA research on Veterans Startups in NY.

Presentation to Digital Humanities class at Pratt Institute, covering the history of computing in the field of archaeology and current digital humanities projects.

Over the next couple of days I am going to post summaries of Internet Week sessions that I attended last month. Here is the first, a panel on open government in NYC:

In June, I attended a panel on Open Government in NYC, hosted by Time Warner as part of Internet Week NYC. I joined my friend, Queens Community Board 3 member Tom Lowenhaupt, who has been advocating for a .NYC top level domain for over ten years. Given some of the roadblocks he has faced in his campaign, I knew attending an Open Government forum with him would be interesting.

Presented in a panel format, the event focused on Setting the Digital Standard for open government. NYC Deputy Mayor for Operations Stephen Goldsmith introduced Jessi Hempel, a senior writer for Fortune Magazine, who moderated a panel of experts in government information technology including:

Commissioner Post opened with a brief description of the city’s plans for a digital roadmap, including a range of web 2.0 tools that allow the City to communicate and join with citizens to make a better city and break through hardened boundaries between people, neighborhoods, agencies, etc. “The fundamental responsibility of government being to allow access to information,” she said.

An example is the “311 to Text” project, a platform for app developers that allows the power of the 311 service to reach a mobile community, in particular, underprivileged people whose primary access to city information and the internet at large is via their mobile phones. Carole indicated that the 311 phone channel has been very successful, so it is a priority of her office to get the message about the system and its various access points out to users.

There is even a 311 iPhone app (clearly for those who can afford an iPhone). I have a neighbor who would love that.

Chief Digital Officer Rachel Sterne, the 27-year-old founder of citizen journalism site GroundReport and an adjunct professor of social media and entrepreneurship at the Columbia Business School, described the City’s digital domain, reaching 2.8 million on NYC.gov and 1.4 million on social media. The City’s accounts include @nycgov, an umbrella Twitter account for the city, and @nycmayorsoffice, which also has its own hashtag #askmike, referring to Mayor Michael Bloomberg. In addition, the 311 NYC Twitter account (@311nyc) focuses on service related questions, maintaining a public record of issues. Agencies also have their own Twitter accounts and similar department feeds. The primary focus for these efforts is access, open government, engagement and accessibility.

NYC Economic Development Corporation President, Seth Pinsky, took a turn explaining his view of the role of technology in NYC. “New technology lowers the barriers to entry in industries where NYC is traditionally a leader. It helps industries transition to new business models, promoting entrepreneurship, and highlight talented workforce,” he said.

Adam Sharp of Twitter discussed how he sees governments using Twitter and compared how NYC measures up. “Technology is good at wholesaling mass audience, and is scalable,” he said. But there is a growing gap of people who have actually met their public servants. There has been confusion about who to contact for which services. Technology like Twitter allows increased interaction and communication with public figures.

Mr. Sharp sees innovation particularly at the federal level. USGS is using Twitter to spot seismographic activity 2 minutes earlier. The agency has seismographs in various parts of the country that measure earthquake activity with feedback within 60 seconds of the quake; however, they have found that tweets from citizens experiencing quakes often allow them to locate possible activity faster, so they have set up the experimental Twitter Earthquake Detection project to test how well Twitter can work in reporting possible disasters. I had tweeted about this myself the day before. For a more local example, Adam noted that the mayor of Newark, NJ, was using it for snow removal response during winter storms, showing up Mayor Bloomberg a bit after this past year’s storm mitigation problems, about which citizens were quite vocal on social media at the time.

Image from Recover.gov, U.S. Dept of the Interior.

Mr. Pinsky described social media in government as a process. The first generation of tools are designed to get the information out to the public (like press releases, broadcasts). The second generation gets information from the public (web forms, phone systems). The third generation, where we are now, is interaction: the open and ongoing conversation between government and citizens (Twitter, Big Apps Challenge, Change By Us – a new initiative launched on July 7, 2010, which is very similar to the Institute for Urban Design’s By the City, For the City app). The hard part to all this, says Seth, is linking incoming and outgoing messages to see the conversation. That is something that needs some work.

Ms. Sterne said that the BigApps competition was launched in response to the significant amount of data at agencies that was either not available to the public or not really being used by the agencies. Big Apps gets that data out to the development community to create applications that citizens can use. This, in turn, spurred the creation of several businesses, which is in line with the City’s push toward fostering a friendlier environment for entrepreneurship in New York City, a noticeable part of PlaNYC 2030, the City’s plan for a “greener, greater New York,” with ideas for improving the quality of life and economic welfare of New York City.

The second round of the Big Apps Competition this year doubled the number of data sets and had a much higher number of responses from developers and sponsors. This year, sponsor BMW doubled its prize offering and set up a venture capital fund for startup businesses.

Some of the Big Apps Winners:

At this point, Ms. Hempel began asking directed questions to the panelists. She asked panelist Adam Sharp how much government activity on Twitter is citizen led. He said that this isn’t necessarily happening yet, but will happen when government sees it as more than a broadcast forum, and when it relieves pressure on other platforms, such as calls. Cities are learning lessons from the private sector about adopting a customer service approach, proactively looking for mentions of their brands and engaging customers. It is a messaging and marketing focus that will evolve as more initiatives like BigApps and Change By Us come into the public eye.

Ms. Hempel asked Carole Post how and whether City agencies interact with each other. Post stated that the mission of agencies is not to exist in a silo, and she encourages interaction with other agencies and the digital office. As someone who has been involved with data advocacy through GISMO, I can certainly attest to how far we’ve come in getting agencies to talk. It took years to get a working, flexible base map of the city and years longer to get data shared in a meaningful way. I worked on the City Information Technology Initiative for the Municipal Art Society in 2004 to demonstrate to local community boards the power of layering GIS data on a map, with the goal of eventually getting an open, accessible map onto the Department of City Planning website. NYCityMap was launched in 2006.

Ms. Post says that agencies have called DoITT to see what of their data they can pull out for public mining. She sees more and more city workers finding venues to pull folks together outside of their daily mission, sort of like what Drive author Daniel H. Pink advocates. They may not know what the end result will be, but they do make the data available and see what happens. Agencies have come to terms with the fact that these days people expect government to be “open and unlocked.”

Mr. Pinsky said that BigApps 3.0 will offer an opportunity to significantly expand this initiative, with more sponsorship money and a new law that opens up even more data. “This allows the marketplace to select the most effective app for different datasets,” he said. “When citizens judge the apps they are selecting most effective one.”

Twitter’s Adam Sharp commented on NYC’s approach to innovation. “The proof is in the result,” he said. “Great third party developer ecosystems help tear down walls and barriers to development.”

And now the citizen response. Ms. Hempel passed around a microphone to allow audience members to ask questions. Here are some of the interesting ones:

What is NYC doing for multi language community?

Carole Post responded that 311 exists as a gateway to services. It is offered in 170 languages, with a language line translator. 311 online and NYC.gov also prioritize language access.

How about expanding access to people who don’t have iPhone or Internet?

Ms. Post said that $40 million in federal stimulus funds is going toward expanding broadband access and knowledge of how technology can improve education and communication. The City plans to extend access through libraries and community centers.

Ms. Sterne also says that public/private partnerships are working to improve access. The mobile strategy includes SMS and text information for those who don’t have broadband at home.

Mr. Pinsky assured us that the City has no plans to eliminate the phone system, and that they plan technical improvements to that system as well.

Mr. Sharp says that “fast follow” via text messaging means that you don’t even need to have a Twitter account in order to get information.

How do people find out about these services?

Ms. Sterne said that they do outreach through the New York City Housing Authority and other NYC programs, as well as through public/private partnerships.

How does the City plan to leverage the acquisition of .NYC TLD?

Ms. Post answered that DoITT is “ready to capitalize on .NYC.” (The City, in fact, committed to acquiring the .NYC TLD in 2009). Mr. Pinsky added that a .NYC TLD represents “…an opportunity to allow locally based company to brand and associate themselves with NYC.” The long term goal is to use the initiative to promote NYC innovation.

Tom pressed. “NYC has apparently decided on what the economic development plans for .NYC is, what about public input?” he asked.

Mr. Pinsky said that they are looking to the public to help, and that it will be a collaborative process. However, no one on the panel seemed ready to talk about what their plans are yet.

Okay. I was waiting for my friend Tom to ask a question about the .NYC Top Level Domain, and he wasn’t going to take any one-line talking point. Tom’s vision is to have a .NYC top level domain that gives citizens a geocoded directory of information about resources in their neighborhood and the city at large. Not just businesses shouting “I am NYC!” but civic groups, community services, block associations and personal websites. I added privately that with the 128-bit IPv6 internet, which expands the possible addresses (Wikipedia’s article on IPv6 cites 2^128 or 340 undecillion – 3.4